How to make Hatsune Miku NT1.0 sing in somewhat fluent English

BEFORE YOU START

VOWEL CONVERSION

| Phoneme (X-SAMPA/ARPABET) | Conversion |
|---|---|
| [V]/[ah] | [M][a ] |
| [e]/[eh] | [e] |
| [I]/[ih] | [i][e ] |
| [i:]/[iy] | [i ][e] |
| [{]/[ae] | [e ][a] |
| [O:]/[ao] | [o][a ] |
| [Q]/[aa] | [o][a ] |
| [U]/[uh] | [M ][e] |
| [u:]/[uw] | [M] |
| [@r]/[er] | [a ][M] |
| [eI]/[ey] | [e ][i] |
| [aI]/[ay] | [a ][e] |
| [OI]/[oy] | [o ][i] |
| [@U]/[ow] | [a][o ][M] |
| [I@]/[iy r] | [i ][a] |
| [e@]/[eh r] | [e ][M] |
| [O@]/[ao r] | [o][a ][M] |
| [Q@]/[aa r] | [o][a ][M] |
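If you're converting a lot of lyrics, the vowel chart can be treated as a lookup table. Below is a minimal Python sketch, assuming your lyrics are already phonemized as space-separated ARPABET tokens in CMUdict style (with stress digits like `AA1`, which the sketch strips). The names `VOWEL_MAP` and `convert_vowels` are hypothetical. The sketch also drops the spacing inside some of the chart's brackets (e.g. `[a ]`), so rows like [ih] and [iy] come out identical here. It tries two-token matches such as `aa r` before single vowels so the r-coloured rows win; any other token passes through unchanged.

```python
import re

# Vowel chart keyed by ARPABET token(s). Assumption: the spacing inside
# some chart brackets (e.g. "[a ]") is dropped; only symbols are kept.
VOWEL_MAP = {
    ("ah",): ["M", "a"],
    ("eh",): ["e"],
    ("ih",): ["i", "e"],
    ("iy",): ["i", "e"],
    ("ae",): ["e", "a"],
    ("ao",): ["o", "a"],
    ("aa",): ["o", "a"],
    ("uh",): ["M", "e"],
    ("uw",): ["M"],
    ("er",): ["a", "M"],
    ("ey",): ["e", "i"],
    ("ay",): ["a", "e"],
    ("oy",): ["o", "i"],
    ("ow",): ["a", "o", "M"],
    # Two-token rows: a vowel followed by "r".
    ("iy", "r"): ["i", "a"],
    ("eh", "r"): ["e", "M"],
    ("ao", "r"): ["o", "a", "M"],
    ("aa", "r"): ["o", "a", "M"],
}


def normalize(token):
    """Lowercase a token and strip a CMUdict stress digit (AA1 -> aa)."""
    return re.sub(r"\d$", "", token.lower())


def convert_vowels(arpabet):
    """Replace vowels using the chart; other tokens pass through unchanged."""
    tokens = [normalize(t) for t in arpabet.split()]
    out = []
    i = 0
    while i < len(tokens):
        pair = tuple(tokens[i:i + 2])
        if len(pair) == 2 and pair in VOWEL_MAP:
            # Longest match first, so "aa r" beats "aa" + "r".
            out.extend(VOWEL_MAP[pair])
            i += 2
        elif (tokens[i],) in VOWEL_MAP:
            out.extend(VOWEL_MAP[(tokens[i],)])
            i += 1
        else:
            out.append(tokens[i])
            i += 1
    return out


print(convert_vowels("S T AA1 R"))  # "star" -> ['s', 't', 'o', 'a', 'M']
```

The output is only a starting point for what to type into Piapro Studio; as the chart itself says, you'll still want to adjust by ear.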

CONSONANT CONVERSION

Consonants are thankfully a little less involved, though you can get more detailed with this section if you want. I'll provide a basic chart of phoneme conversions, but none of these are set in stone and they can change with context. If I covered every possible consonant combination, this page would be a mile long ^^.

I've left out phonemes that are the same across both phonetic systems and don't need to be edited or replaced.

| Phoneme (X-SAMPA/ARPABET) | Conversion |
|---|---|
| [r]/[r] | [w] |
| [l0]/[l] | [4] |
| [v]/[v] | [m] |
| [T]/[th] | [s] |
| [D]/[dh] | [4] |
| [Z]/[zh] | [S] |
| [t]/[t] | [ts] |
| [d]/[d] | [dz] |
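The consonant chart works the same way, with any phoneme that isn't listed passing through unchanged. Here's a minimal Python sketch, again assuming space-separated ARPABET input; `CONSONANT_MAP` and `convert_consonants` are hypothetical names, not anything built into Piapro Studio. If you also apply the vowel chart, do that first, so the `r` in rows like `aa r` is absorbed into the vowel instead of turning into [w].

```python
import re

# Consonant chart keyed by ARPABET. Phonemes not listed are the same in
# both systems, so they pass through untouched.
CONSONANT_MAP = {
    "r": "w",
    "l": "4",
    "v": "m",
    "th": "s",
    "dh": "4",
    "zh": "S",
    "t": "ts",
    "d": "dz",
}


def convert_consonants(arpabet):
    """Map each listed consonant through the chart; keep other tokens as-is."""
    out = []
    for token in arpabet.split():
        # Look up a lowercased, stress-stripped key (AH0 -> ah), but return
        # the original token when there's no match, so symbols like Miku's
        # "M" are not lowercased into "m".
        key = re.sub(r"\d$", "", token.lower())
        out.append(CONSONANT_MAP.get(key, token))
    return out


print(convert_consonants("DH AH0"))  # "the" -> ['4', 'AH0']
```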

SPLICING

For this section you'll need a feminine vocalsynth or a real vocalist who is more fluent in English than Hatsune Miku and who won't sound out of place when parts of them are spliced into her. I'll be using RX Audio Editor for this section.

For vowel and voiced consonant splicing, your original .ppsf file and whatever file you use for the secondary vocal must be tuned exactly the same way, or none of this will blend together properly. What we're doing here is essentially an auditory illusion: we want to take just enough from a secondary vocal to support pronunciation, but not so much that it distracts from the base tone of Hatsune Miku NT.

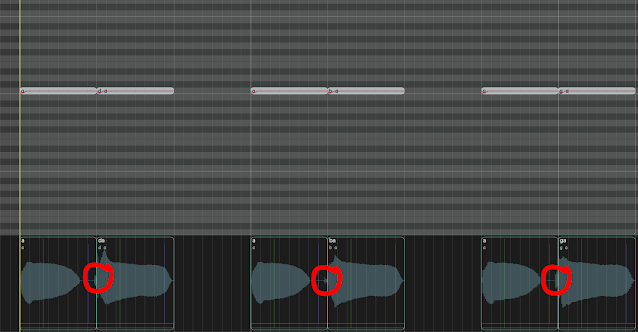

In RX Audio Editor, open your Hatsune Miku NT render, normalize it to -1.0 dB, and go to the section that needs vowel splicing.
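For reference, here's what normalizing to -1.0 dB amounts to, assuming RX is doing peak normalization (scaling the whole file so its loudest sample lands on the target level). This is a minimal Python sketch on a plain list of float samples where 1.0 is full scale; `peak_normalize` is a hypothetical helper, not an RX function.

```python
import math


def peak_normalize(samples, target_db=-1.0):
    """Scale samples so the loudest peak sits at target_db (dBFS)."""
    peak = max(abs(s) for s in samples)
    if peak == 0.0:
        return list(samples)  # silence: nothing to scale
    target_amp = 10 ** (target_db / 20)  # -1.0 dB is roughly 0.891 of full scale
    gain = target_amp / peak
    return [s * gain for s in samples]


out = peak_normalize([0.5, -0.25, 0.1])
print(round(20 * math.log10(max(abs(s) for s in out)), 6))  # -1.0
```

Applying the same target to both renders means they share the same peak level before you start splicing between them.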

Next, open the secondary vocalist you're going to splice from and normalize it to -1.0 dB as well. This can be any engine or vocalist, as long as the tone is somewhat Miku-like. We want to preserve as much of the Piapro NT engine noise as we can, so only select a small portion of the vowel: take just 3 or 4 lines from the bottom of the spectrogram, or the illusion will be ruined.
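If it helps to put a number on "3 or 4 lines": assuming the lines at the bottom of a sung vowel's spectrogram are its harmonics, they sit at whole-number multiples of the note's fundamental frequency f0. Keeping the bottom N lines then roughly means keeping everything below about (N + 0.5) × f0, halfway to the next line. This Python sketch does that arithmetic, assuming standard tuning (A4 = 440 Hz) and MIDI note numbers; the helper names are hypothetical, and the result is only a guide for how high to drag your selection in RX, not a setting RX asks for.

```python
def midi_to_hz(note):
    """Equal-tempered frequency of a MIDI note number (69 = A4 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def harmonic_cutoff_hz(f0, lines=4):
    """Frequency halfway between harmonic `lines` and harmonic `lines + 1`.

    Harmonic k sits near k * f0, so selecting everything below this
    value keeps the bottom `lines` harmonics and excludes the next one.
    """
    return (lines + 0.5) * f0


f0 = midi_to_hz(69)  # A4
print(harmonic_cutoff_hz(f0, 4))  # 1980.0
```

Because the cutoff scales with f0, higher notes let you (and force you to) select a taller slice of the spectrogram for the same number of lines.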

For most consonants, you can take them directly from the secondary vocalist. Be wary of voiced consonants, though, and try not to use too many of them.

Once you've done all this, you should have an output that sounds pretty presentable. Add any final touches and you're done!
